LLM as Judge

Clear, insightful feedback is crucial for improving conversation quality and maintaining high standards in AI-customer interactions. We use fine-tuned LLMs to evaluate each voice-based interaction with the AI assistant against a set of criteria and to generate feedback on how successful the interaction was. This approach is called LLM as Judge.

Why LLM as Judge is useful

1. Great feedback for prompt optimization:

Provides valuable insights to refine prompts effectively.

2. Monitor quality of calls:

Helps ensure consistent and high-quality interactions during client calls.

Judge Criteria

| Feedback Criteria | Question / Content |
|---|---|
| Persona | Question: Does the agent talk about itself (its persona) as defined in its prompt? |
| Style | Question: Is the style of the conversation consistent with the prompt (tone, wording such as professional or casual, etc.)? |
| Steps | Question: Does the agent follow the steps outlined in the prompt, in the correct order? |
| No Repetition | Question: Does the agent avoid unnecessary repetition unless requested by the human? |
| Objections | Question: Does the agent handle objections as defined in the prompt? |
| Objection Not Defined | Question: How does the agent handle objections not mentioned in the prompt? |
| Knowledge | Question: Does the agent use relevant knowledge beyond what's in its prompt if needed? |
| Goal Achievement | Question: Did the agent achieve the call objective specified in its prompt? |
| Appointment Handling | Question: If the user wants to book an appointment, are date, time, and timezone handled correctly? |
| User Sentiment | Question: Was the human sentiment positive during the call? |
| Agent Sentiment | Question: Was the agent sentiment positive during the call? |
| Call Completion | Question: Did the call end with a proper goodbye instead of being cut off? |
| Answered by Human | Question: Was the call answered by a human? |
| Do not Call | Question: Was the call flagged as "Do not Call"? |
| Call Summary | Concise summary of the call (max 500 characters). |
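To make the criteria concrete, here is a minimal Python sketch of how the judge data for one call could be represented and sanity-checked. The class name `JudgeResult`, the snake_case keys, and the free-text answer format are illustrative assumptions, not the product's actual output format; only the criterion names and the 500-character summary limit come from the table above.

```python
from dataclasses import dataclass, field

# Criterion names follow the table; the snake_case spelling is hypothetical.
CRITERIA = (
    "persona", "style", "steps", "no_repetition", "objections",
    "objection_not_defined", "knowledge", "goal_achievement",
    "appointment_handling", "user_sentiment", "agent_sentiment",
    "call_completion", "answered_by_human", "do_not_call",
)
MAX_SUMMARY_CHARS = 500  # limit stated for the Call Summary row


@dataclass
class JudgeResult:
    """Hypothetical container for the judge's feedback on a single call."""
    answers: dict = field(default_factory=dict)  # criterion -> free-text answer
    call_summary: str = ""

    def validate(self) -> list:
        """Return a list of human-readable problems (empty if none)."""
        problems = []
        for name in self.answers:
            if name not in CRITERIA:
                problems.append(f"unknown criterion: {name}")
        for name in CRITERIA:
            if name not in self.answers:
                problems.append(f"missing criterion: {name}")
        if len(self.call_summary) > MAX_SUMMARY_CHARS:
            problems.append(
                f"call summary exceeds {MAX_SUMMARY_CHARS} characters"
            )
        return problems
```

A check like `JudgeResult(answers, summary).validate()` could run before judge data is stored, so that incomplete or oversized results are caught early.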

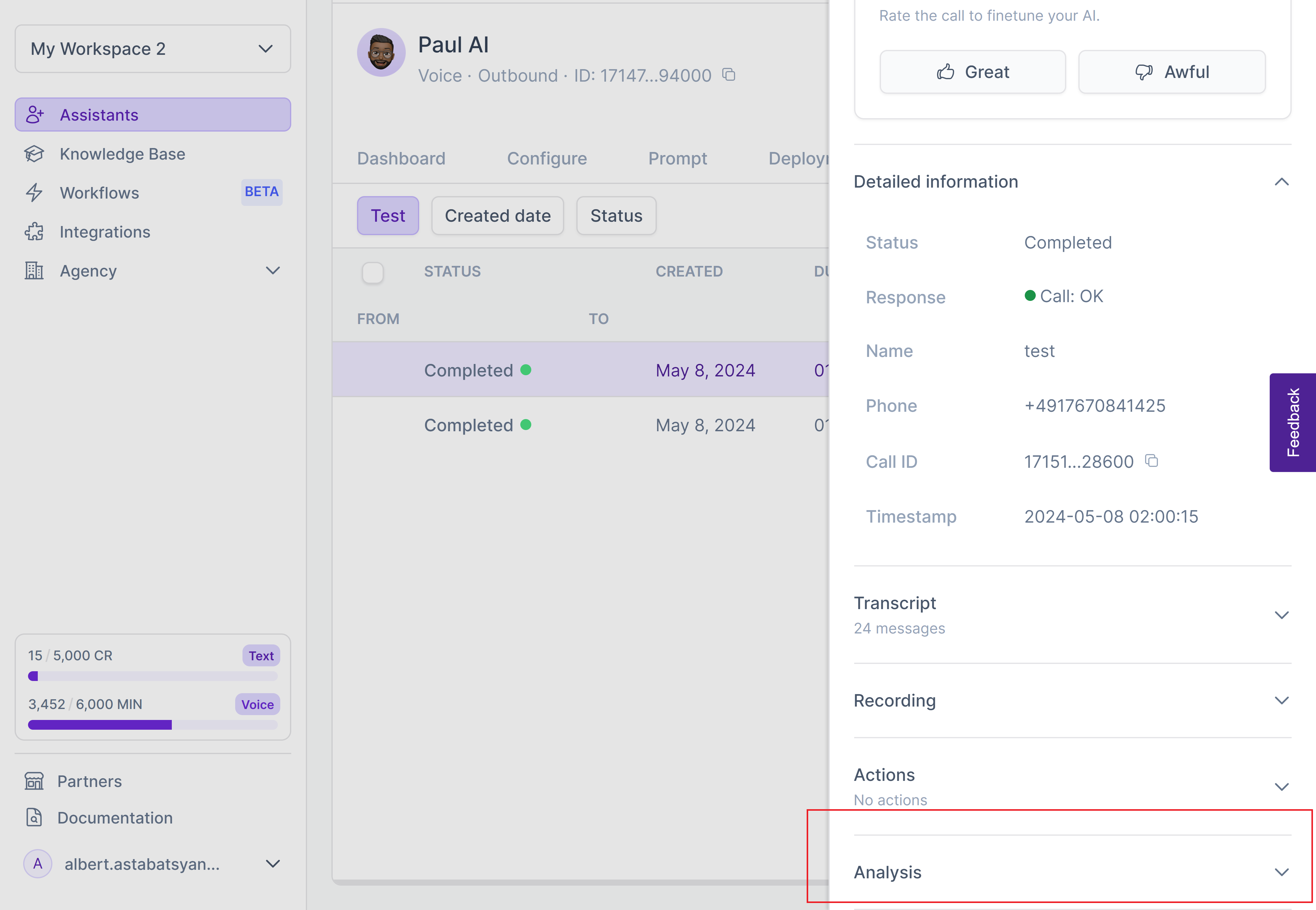

LLM as Judge data is listed for each individual call, after its completion, in the call logs of the AI assistant.
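For quality monitoring, per-call judge data can be aggregated across many calls. The sketch below assumes the call logs have been exported as a list of dictionaries that map each criterion to `True`, `False`, or `None` (not applicable). That export format, the key names, and the function `pass_rates` are hypothetical, since no export format is described here.

```python
from collections import defaultdict


def pass_rates(calls):
    """Compute the share of calls that passed each criterion.

    `calls` is a list of dicts mapping criterion name -> True/False/None.
    Verdicts of None (criterion not applicable) are ignored.
    """
    passed = defaultdict(int)
    total = defaultdict(int)
    for call in calls:
        for criterion, verdict in call.items():
            if verdict is None:
                continue  # skip criteria that did not apply to this call
            total[criterion] += 1
            if verdict:
                passed[criterion] += 1
    return {criterion: passed[criterion] / total[criterion] for criterion in total}


# Example with two hypothetical calls
calls = [
    {"goal_achievement": True, "call_completion": True},
    {"goal_achievement": False, "call_completion": None},
]
rates = pass_rates(calls)
```

A criterion whose pass rate drops over time is a natural starting point for reviewing the corresponding part of the prompt.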

Updated 2 months ago